Generative AI: double-edged sword

Antonio Sanchez at Fortra describes how businesses should protect themselves against generative AI cyber-crime

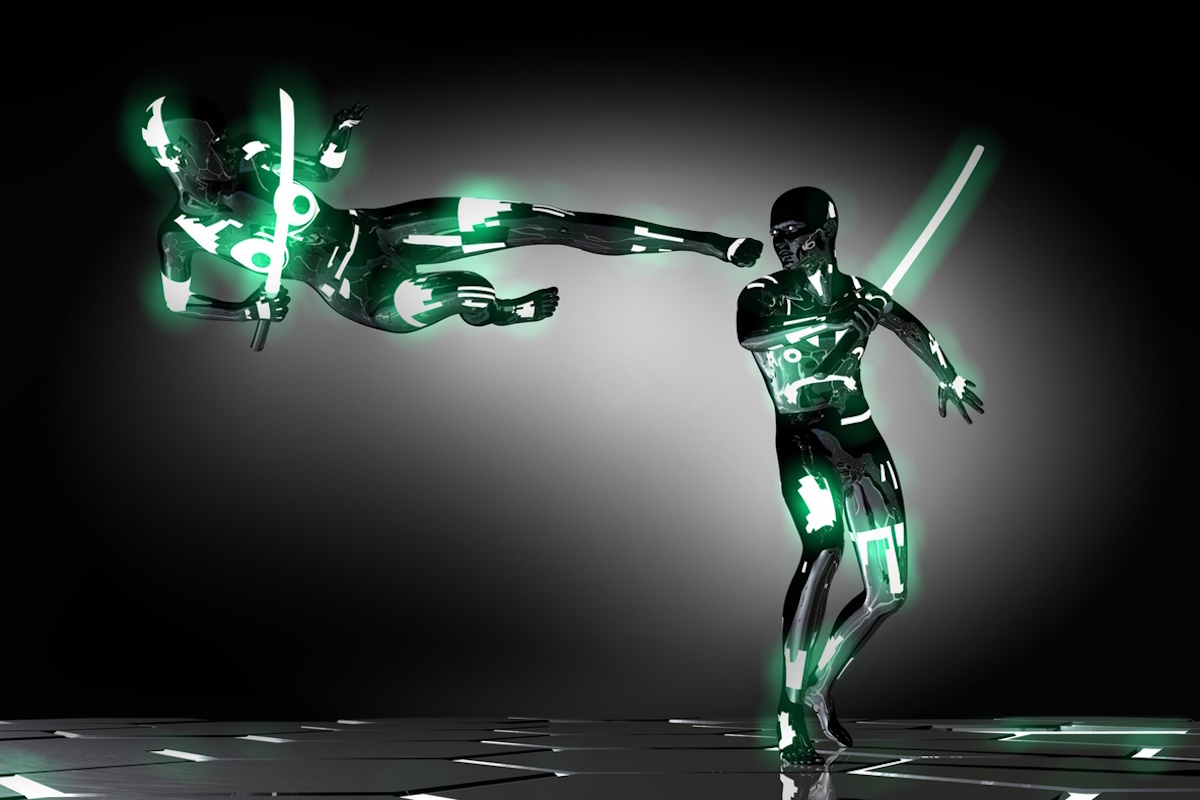

It’s an arms race, and generative AI is both the weapon and the shield that will bring us all into the new era. How it’s used remains to be seen, but both sides will continue to use it to get what they want.

ChatGPT: fan-favourite of both sides

On the one hand, threat actors have already leveraged the power of Artificial Intelligence (AI), Generative AI, and Large Language Models (LLMs) to create malicious code, scan source code for exploits, and create countless phishing scams and deepfakes.

On the other hand, security practitioners are using these tools to catch AI-based threats in real time, spot slight variances that could spell bad behaviour, and create code of their own to net even more bad actors.

The race is on, and the winner will be the one that can leverage AI the most, and the best.

The more familiar we, as defenders, are with the technology, its risks and its rewards, the better we can position ourselves to keep pace with the criminals, because generative AI can be used both ways.

The AI we’re up against

Generative AI and LLMs have been an absolute boon to attackers. Now they can do more in less time, and they need less skill to do it.

AI and LLMs have lowered the bar to entry for script kiddies and even intermediate attackers looking to do more. With AI, attackers can scan the same code in less time and find just as many opportunities, if not more. They can easily reverse engineer common off-the-shelf software or pry open the open-source code that underpins a lot of companies and products today.

The ease of rapidly creating polymorphic malware is another huge AI win for the cyber-criminal community. This type of malware changes its appearance and morphs its code to evade detection by signature-based and many behavioural detection models. Lower-level attackers are now manipulating ChatGPT queries to churn out polymorphic malware and zero-day attacks with greater ease and greater complexity.
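To see why signature matching struggles with code that changes its appearance, consider a minimal Python sketch. It assumes the simplest kind of signature, an exact SHA-256 hash of a file, and uses harmless placeholder bytes. The names `signature_db` and `matches_signature` are hypothetical and not taken from any product.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical "known bad" sample: a harmless placeholder byte string.
known_sample = b"PLACEHOLDER-PAYLOAD-v1"

# Assumed signature store: a set of exact hashes of known samples.
signature_db = {sha256_hex(known_sample)}


def matches_signature(data: bytes) -> bool:
    """Check whether the data's exact hash is in the signature store."""
    return sha256_hex(data) in signature_db


# A variant that differs by a single padding byte.
variant = known_sample + b"\x00"

print(matches_signature(known_sample))  # True
print(matches_signature(variant))       # False
```

A single changed byte produces a completely different hash, so the exact-match check misses the variant. Real detection engines use richer signatures than a whole-file hash, but the sketch shows the basic weakness that morphing code exploits.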

And this is only the beginning. The number of cyber-security risks stemming from generative AI is limited only by people’s creativity. It’s no secret that ChatGPT doesn’t keep secrets, and what’s worse, your information might be used to craft an alarmingly personal phishing email or a highly convincing deepfake.

The only saving grace is that the same generative capabilities available to one party are available to the other. As of now, ChatGPT is still publicly available. While this makes it harder to patrol, it opens the door wide to innovation from both sides.

Using Pandora’s Box for good

From a defender’s point of view, we certainly haven’t wasted time finding productive uses for AI and LLMs on the security side.

First, we’re tackling the perennial problem of “too much work, not enough time” (or staff, or resources, etc.). We’re putting generative AI to work by making it do our mundane tasks. From monitoring network traffic to analysing user behaviour, to writing scripts, we’ve been able to offload a tonne of necessary evils to AI-based bots.

We’re also witnessing a possible win when it comes to widening the pool of hireable candidates. A deep background in security technologies may no longer be a deciding factor when looking to take on new analysts.

More prized would be the ability to find the right answer. When it comes to staffing your SOC, people with the right problem-solving capabilities could be prioritised over human encyclopaedias. Expertise can never be replaced, but having AI to cover technical requirements opens the door to taking on practitioners with a wider-ranging skillset.

And even before ChatGPT came along, we were using AI-based detection and response tools to catch criminals in the act – even when their code is hidden or changed. As more polymorphic malware hits the scene, we can fight fire with fire by using AI to identify highly complex behavioural threats across an ecosystem and learn enough to detect them sooner next time.
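The idea of learning what normal behaviour looks like and flagging departures from it can be illustrated with a small Python sketch. This is a plain statistical rule, not an AI model, and it stands in for the far richer baselines that detection and response tools learn. The function name `is_anomalous`, the threshold of three standard deviations and the login counts are all assumptions made for illustration.

```python
from statistics import mean, stdev


def is_anomalous(history, observed, threshold=3.0):
    """Flag a value that sits more than `threshold` standard
    deviations away from the mean of the historical baseline."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat baseline: any change is a departure.
        return observed != mu
    return abs(observed - mu) / sigma > threshold


# Hypothetical daily login counts for one user over a week.
baseline_logins = [4, 5, 6, 5, 4, 6, 5]

print(is_anomalous(baseline_logins, 5))   # False
print(is_anomalous(baseline_logins, 40))  # True
```

Because the check looks at what an account does rather than what a file looks like, it does not depend on the code staying the same from one attack to the next.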

This coming year, the threats and security capabilities of generative AI are only going to grow. Cyber-criminals are nearly unstoppable in their tenacity. Security practitioners cannot afford to let them get even one step ahead in understanding, manipulating and optimising the capabilities of generative AI.

The industry is at an exciting crossroads, and one that will only get more interesting as the road goes on. Becoming curious about the creative potential of generative AI is not only going to help defenders fight off this year’s attacks but may ensure digital survival in the future.

An AI-infused world is here, and it’s up to us to make it safe.

Antonio Sanchez is principal cyber-security evangelist at Fortra

Main image courtesy of iStockPhoto.com

© 2025, Lyonsdown Limited. teiss® is a registered trademark of Lyonsdown Ltd. VAT registration number: 830519543